7 High-Impact BigQuery Optimizations To Reduce Your Cloud Bills

BigQuery pricing eating into your cloud budget? These simple tips will help you lower your GCP costs.

Optimization Suggestions

BigQuery’s power and scalability come with costs that can quickly escalate. While basic SQL optimizations help, these seven BigQuery-specific strategies deliver the highest ROI for reducing your GCP spend.

Tip: Don’t miss the Bonus section at the end.

1. Table Partitioning and Clustering

Partitioning divides your tables into smaller segments based on a specified column (typically date), while clustering organizes data within partitions. Together, they dramatically reduce the amount of data scanned during queries.

For time-series data, partition by date. For location data, consider ingestion-time partitioning with clustering on location fields.

2. Reservation vs On-Demand Pricing

BigQuery offers two pricing models:

On-demand: Pay $6.25/TB processed

Flat-rate: Purchase dedicated slots - based on processing capacity

* Enterprise and Enterprise plus pricing is lower with more committed workloads.

For predictable workloads exceeding 190TB/month, flat-rate pricing often delivers substantial savings. Organizations with steady query volume typically see 30-60% cost reduction when properly sized. Use the BigQuery Calculator to determine your crossover point.

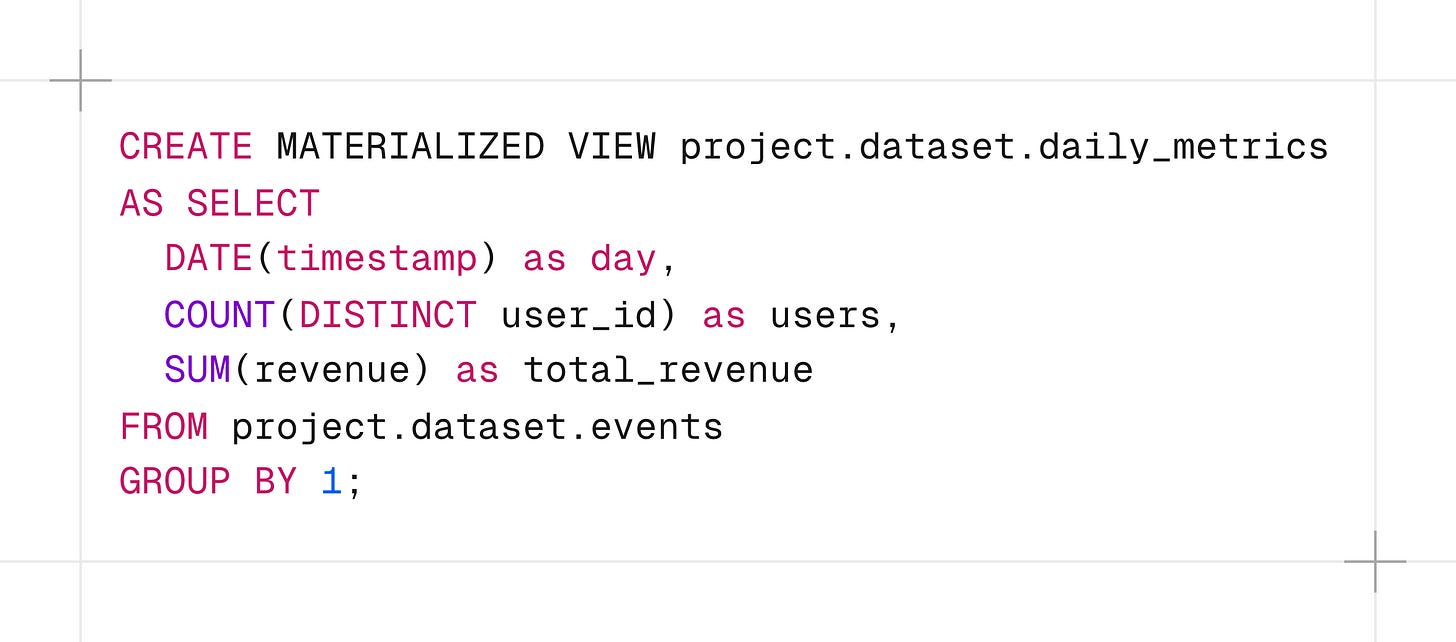

3. Materialized Views

Pre-compute and incrementally maintain results of complex queries that run frequently.

BigQuery automatically uses materialized views when they can accelerate queries, even when not explicitly referenced. This can reduce processing costs by 40-80% for frequent analytical queries.

4. Query Caching

BigQuery automatically caches results for 24 hours. Identical queries use the cache with zero cost.

Some things to keep in mind about BigQuery Caching:

Cached results are user-specific - Use Service Account Impersonation1.

Tables modified since the original query invalidate cache.

For reporting workflows, structure queries to maximize cache hits and eliminate costs for all but the first execution.

5. Cost Controls and Monitoring

Implement these controls to prevent unexpected expenses:

Project-level quotas to cap daily spending

Custom cost monitoring dashboards:

Budget alerts at 50%, 75%, and 90% thresholds

IAM roles to restrict who can run expensive queries

6. Dry Run as a Habit

Before running a complex query, especially one that involves a large dataset, use the "Dry Run" option in the BigQuery UI or in code2. This feature estimates the cost of the query without actually executing it.

Make it a habit to Dry Run all potentially expensive queries. This allows you to identify potential issues and optimize your query before you incur unnecessary charges.

7. Order in WHERE Clause

The order of conditions in WHERE clauses significantly impacts performance. BigQuery evaluates conditions sequentially - place the most restrictive filters first.

When the city filter eliminates 99% of data and the date filter eliminates 10%, putting city first can reduce processing by 10-50%.

Bonus

These additional techniques deliver smaller but still meaningful savings:

Data Type Alignment: Explicitly cast literals to match column types

LIMIT for Exploration: Always use LIMIT when exploring data

Avoid Leading Wildcards: Use

LIKE ‘prefix%’instead ofLIKE ‘%suffix’APPROX_COUNT_DISTINCT: Use for large datasets when exact counts aren't necessary

Table Expiration: Set automatic expiration times on temporary tables

JOIN Optimization: Ensure proper join conditions and join order

EXPLAIN: Analyze query plans to identify bottlenecks

Combining these strategies can reduce your BigQuery costs by 60-80% while maintaining or improving query performance.

If you enjoyed this listicle on BigQuery optimizations, consider subscribing to Stackgazer for more thoughtful analysis at the intersection of technology, philosophy, and human experience.

https://cloud.google.com/docs/authentication/use-service-account-impersonation

https://cloud.google.com/bigquery/docs/samples/bigquery-query-dry-run